Intangible Interaction

- Your name

Dylan Dawkins

- Project title

Paint By Numbers

- A Summary of Your Project (1-2 sentences)

Paint by Numbers is a collaborative mural that gives patients at a children's hospital a chance to show their creativity, and gives family, friends, and strangers the opportunity to make that creativity a reality. Each patient is assigned a small section of the mural to draw on. That drawing is passed on to someone with access to the large-scale mural, who fills in the corresponding section.

- Ideal Installation Venue

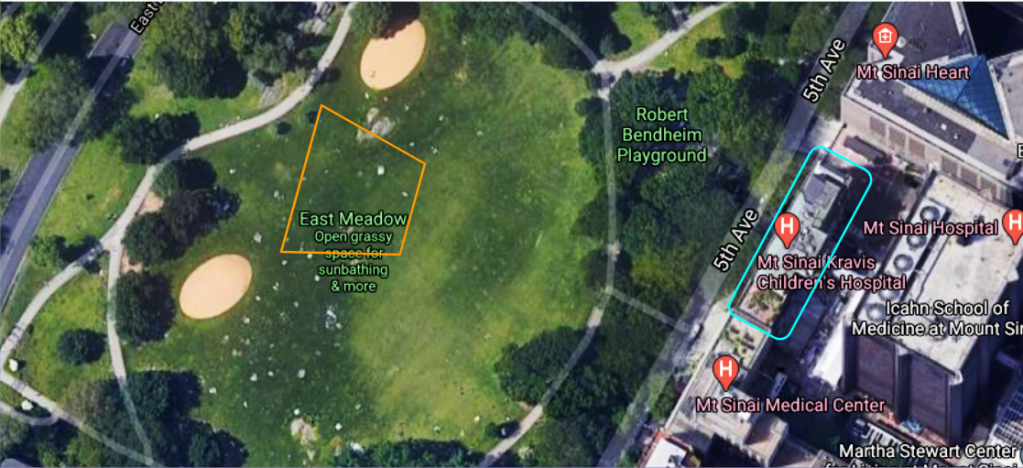

The installation venue would be a large grassy area in Central Park's East Meadow, across from Kravis Children's Hospital.

- Background and Concept

This project was inspired by the uptick in arts-related pastimes during the pandemic. Seeing the jigsaw puzzles cleaned out at Walmart and Target, along with the many posts of people painting and using coloring books, led me to ask: what if this were something we could do collectively? It was also inspired by the hospital experience. For kids in long-term hospital care, going outside and doing creative activities can be a challenge, so I wanted to give them an opportunity to make something that would leave a beautiful mark outside their hospital room. A similar earlier project is Epcot's Paint By Numbers activity during the Festival of the Arts.

- Project Description

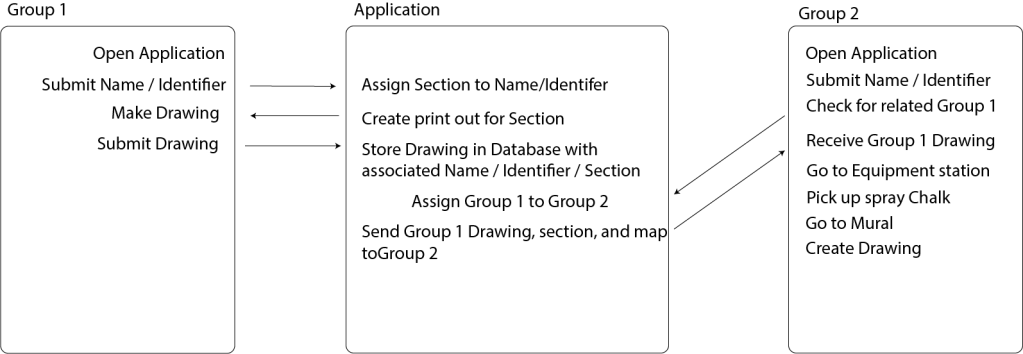

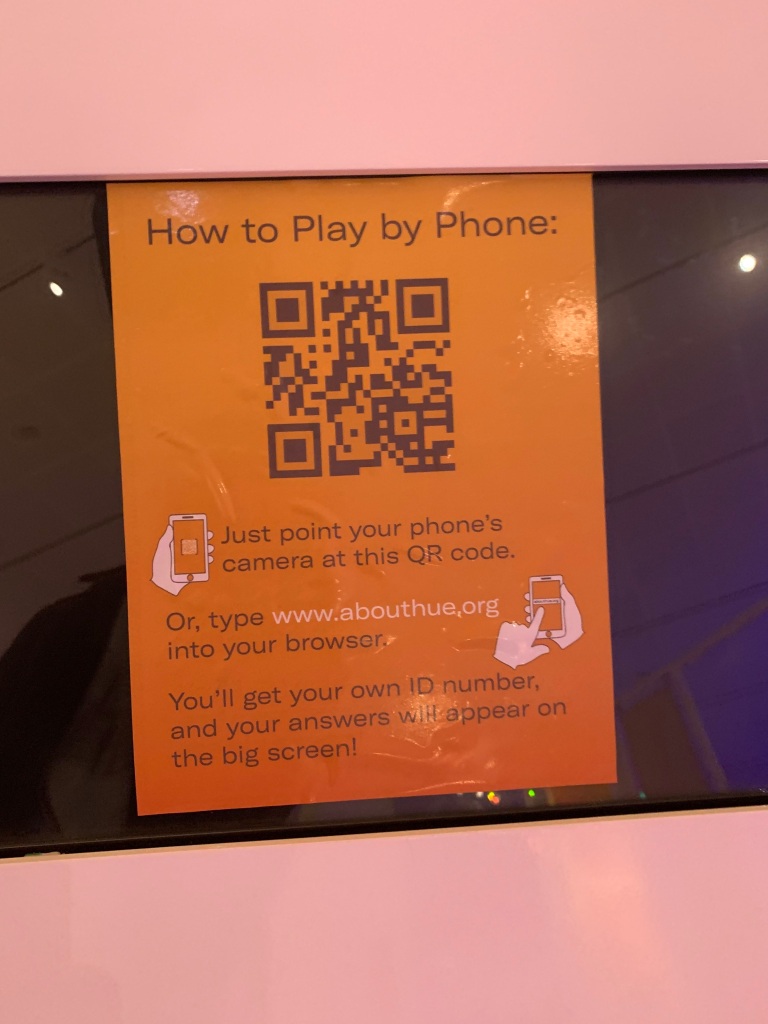

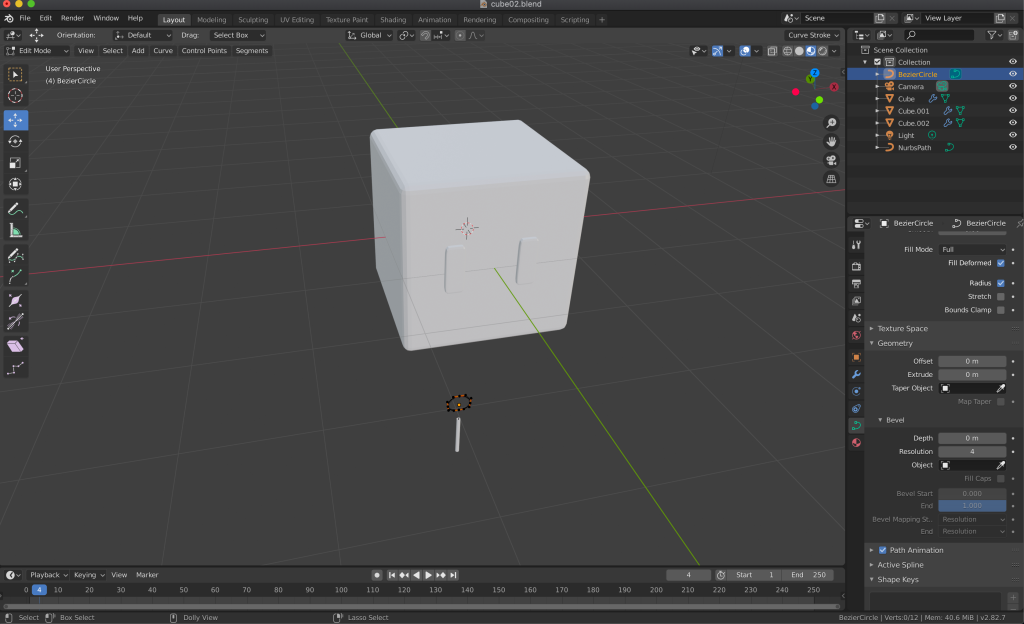

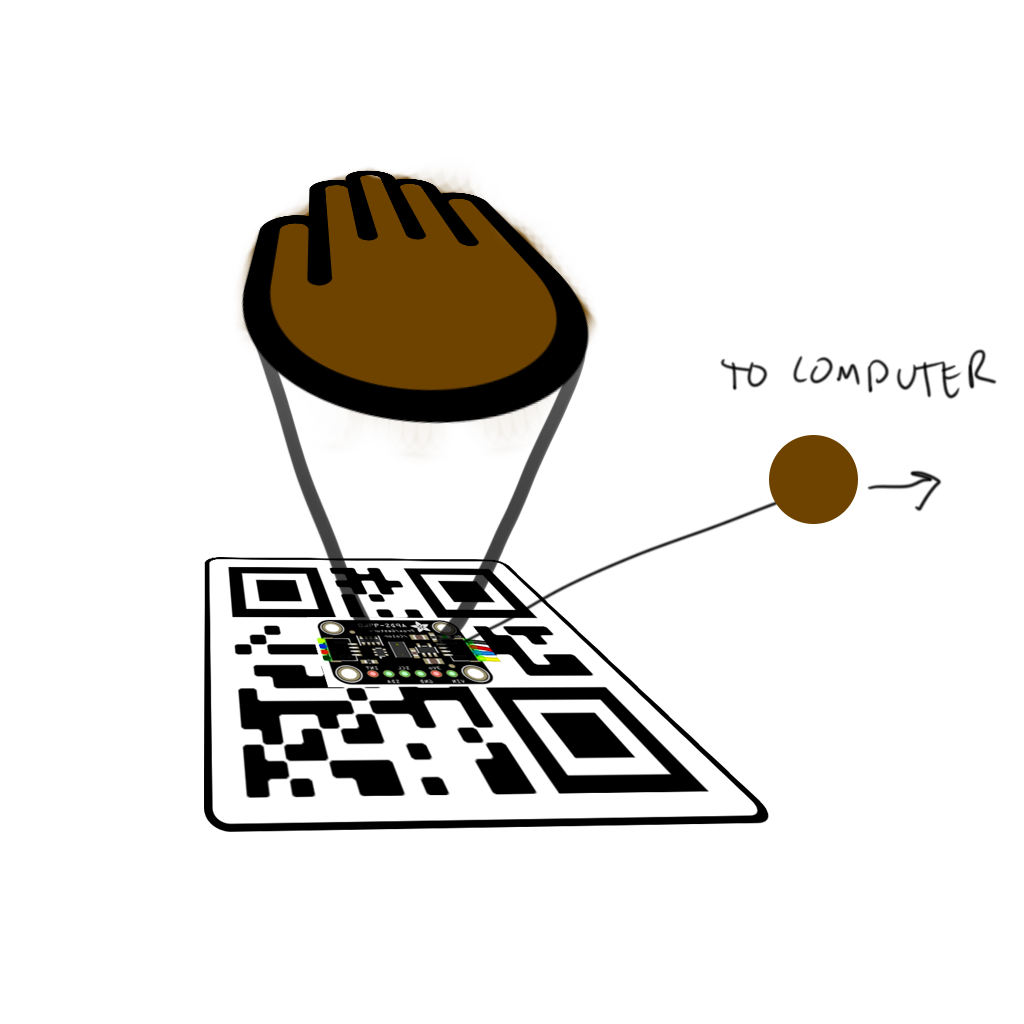

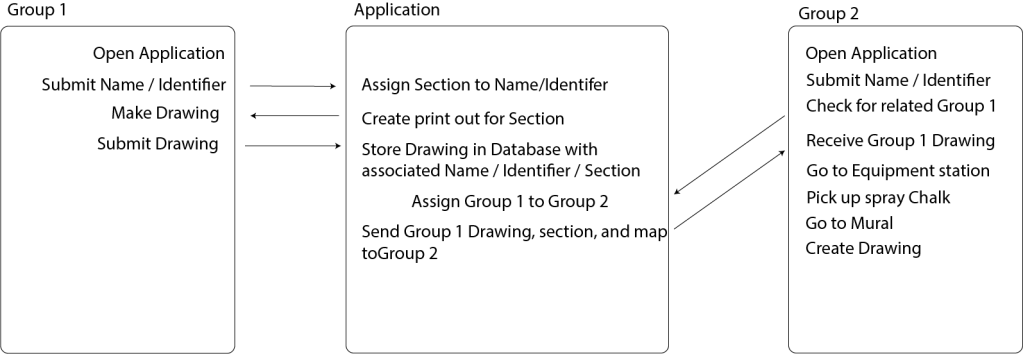

This project is a paint by numbers on a gigantic scale. It is split into three parts: the setup, participant group one, and participant group two. The setup involves creating a mural outline both in digital format and on the Central Park grass. This outline serves as the template from which both participant groups work.

Each section of the mural will be numbered and assigned to a patient in one of the hospital's park-facing rooms (or in a long-term care room). These patients make up participant group one. They can use four colors (light red, light yellow, light blue, and white) to create their own drawing within the lines of their section. They can either draw on their device or use colored pencils on a printout of their assigned section.

Once the drawings are saved, the project moves to participant group two. Members of group two are either family, friends, or associates of a group one participant, or people who sign up to take part in the project. The former receive the drawing of their related group one participant, and the latter receive a random drawing from group one. Based on the colors used in the drawing, each group two participant is given a set of spray chalk and a time slot to go to the mural and recreate the drawing in its section. In the end, the mural will be filled with chalk drawings by the children in the hospital, as interpreted by their friends and family or by strangers.

Paint by Numbers takes a different approach to the idea of sensors and to how sensors such as cameras interpret data. A camera converts light from three-dimensional space into a two-dimensional grid of pixels. In the same way, this project considers how people might act as sensors, reinterpreting light and color and then recreating them.
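The hand-off from group one to group two is essentially a matching step, and it could be sketched roughly as follows. This is a hypothetical illustration, not the project's actual app. It assumes each saved drawing is stored with its section number, its patient, and the set of colors used. It also assumes each group two volunteer is either linked to one patient or is a stranger who signed up. The names `Drawing`, `Volunteer`, `assign`, and `PALETTE` are invented for this sketch.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Four-color palette from the project description.
PALETTE = {"light red", "light yellow", "light blue", "white"}


@dataclass
class Drawing:
    section: int       # numbered mural section assigned to the patient
    patient: str       # hypothetical patient identifier
    colors: set[str]   # colors the patient used in the drawing


@dataclass
class Volunteer:
    name: str
    linked_patient: str | None = None  # None means a stranger who signed up


def assign(drawings: list[Drawing], volunteers: list[Volunteer],
           seed: int = 0) -> dict[str, tuple[int, list[str]]]:
    """Hypothetical matcher: volunteer name -> (section, chalk colors to bring)."""
    rng = random.Random(seed)
    by_patient = {d.patient: d for d in drawings}
    unclaimed = {d.section: d for d in drawings}
    plan: dict[str, tuple[int, list[str]]] = {}

    def give(volunteer: Volunteer, drawing: Drawing) -> None:
        # Each section is painted once; chalk is limited to palette colors used.
        del unclaimed[drawing.section]
        plan[volunteer.name] = (drawing.section, sorted(drawing.colors & PALETTE))

    # Family and friends first: each receives their own patient's drawing.
    for v in volunteers:
        d = by_patient.get(v.linked_patient)
        if d is not None and d.section in unclaimed:
            give(v, d)

    # Strangers receive a random drawing that has not been claimed yet.
    for v in volunteers:
        if v.linked_patient is None and unclaimed:
            give(v, unclaimed[rng.choice(sorted(unclaimed))])

    return plan
```

Linked volunteers are matched before strangers so that a random draw never takes a drawing a family member is waiting for. In this sketch, a linked volunteer whose patient's drawing was already claimed is simply left unassigned. A real app might handle that case differently.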

- Audience Interaction

There are three levels of audience interaction. The first two are the participant groups described above. The third is the passersby in Central Park, who would see the giant mural on the grass and wonder why it was there. They would not interact with the mural itself, but a poster next to the mural would explain its purpose and give them an opportunity to join the second group. In addition, children from group one would be brought to the roof of the hospital during the time slot when their corresponding group two members are scheduled to paint their piece of the mural, so they can see their drawing come to life. The app would also allow group two members to share photos of their painted section with the child from group one.

- Social Impact

There are a few different ways I want this installation to impact people. The first is that I want people to feel they can add creativity to the world. Closely related, I want them to feel that by working together, that creativity can become even larger. For the children, this means seeing their tiny drawing become a bigger painting made by their family, friends, or someone they have never met. For the other participants, it means seeing their bigger painting become part of something even larger. I want to instill a sense of community and show that with collaboration and connection we can build amazing things that still carry our own unique touch.

- Sketch / 3D Drawing / Photo / Installation Plan

- System Diagram

- Prototype to Show the Proof of Concept

LIVE DEMO

- Budget

- Additional Information

Presentation link: https://docs.google.com/presentation/d/1Jw6rVzdfkDsn7XraQncjXLZ26PSpGB0iBLgmFzAGHcA/edit?usp=sharing